AI

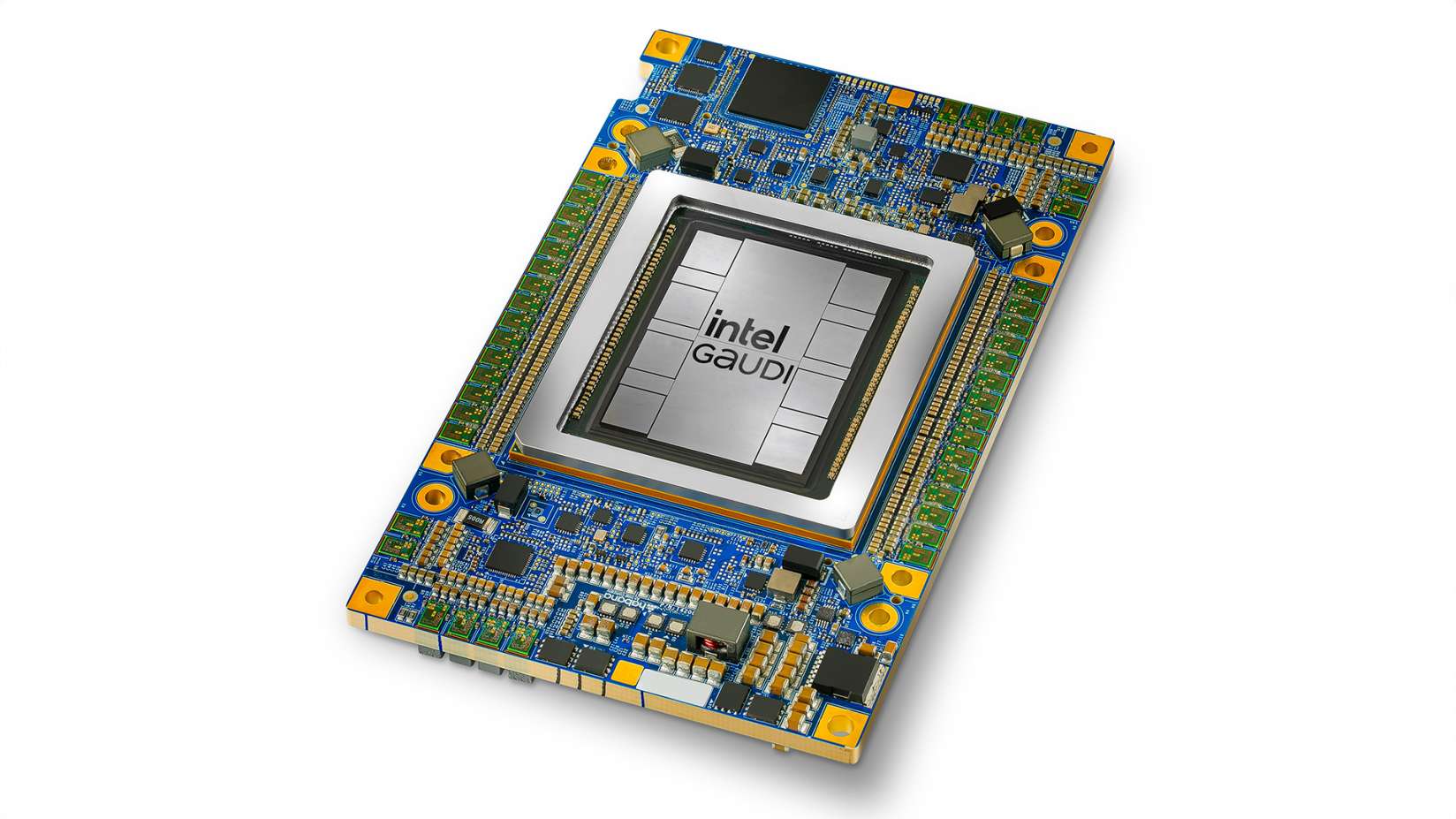

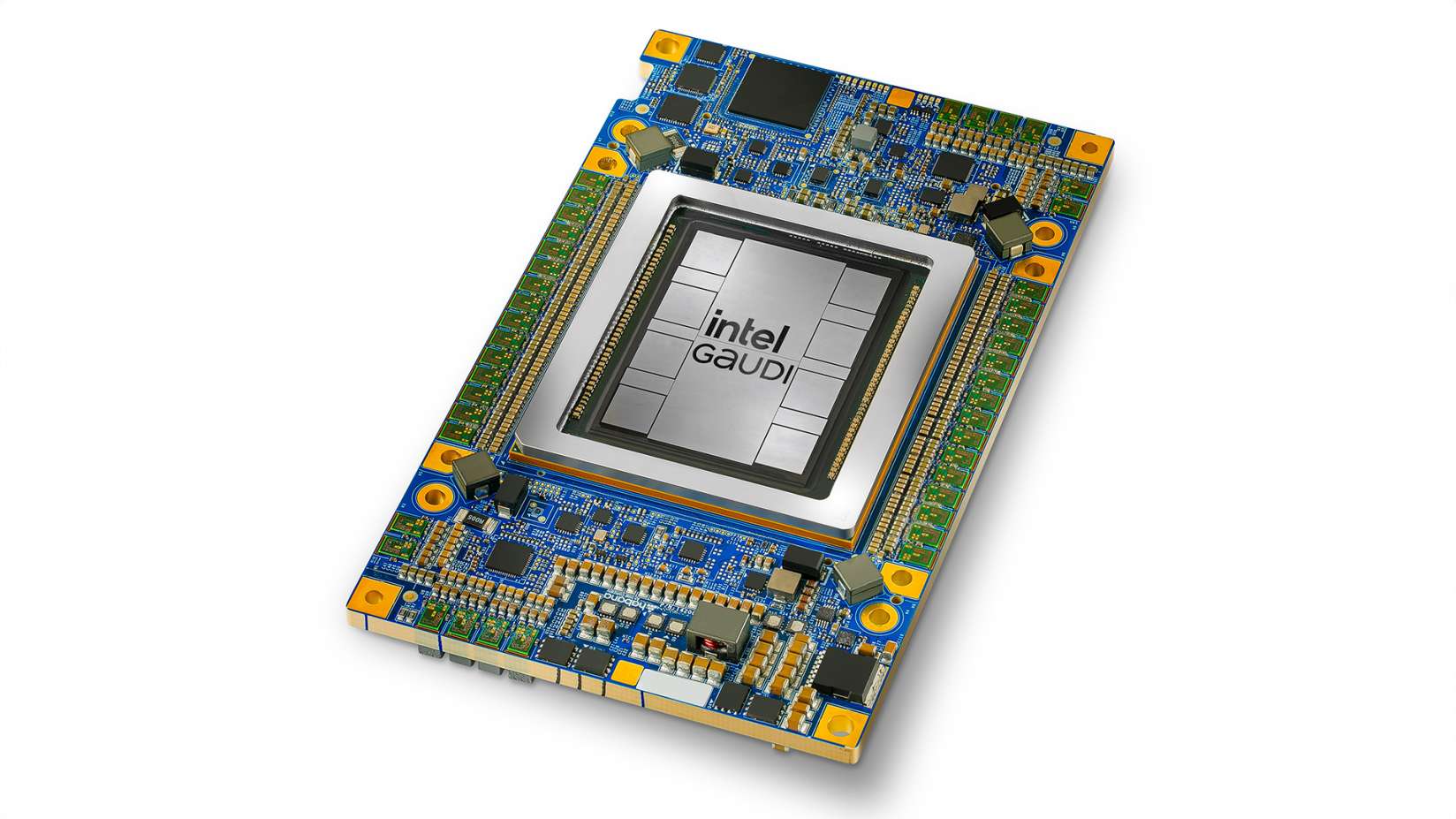

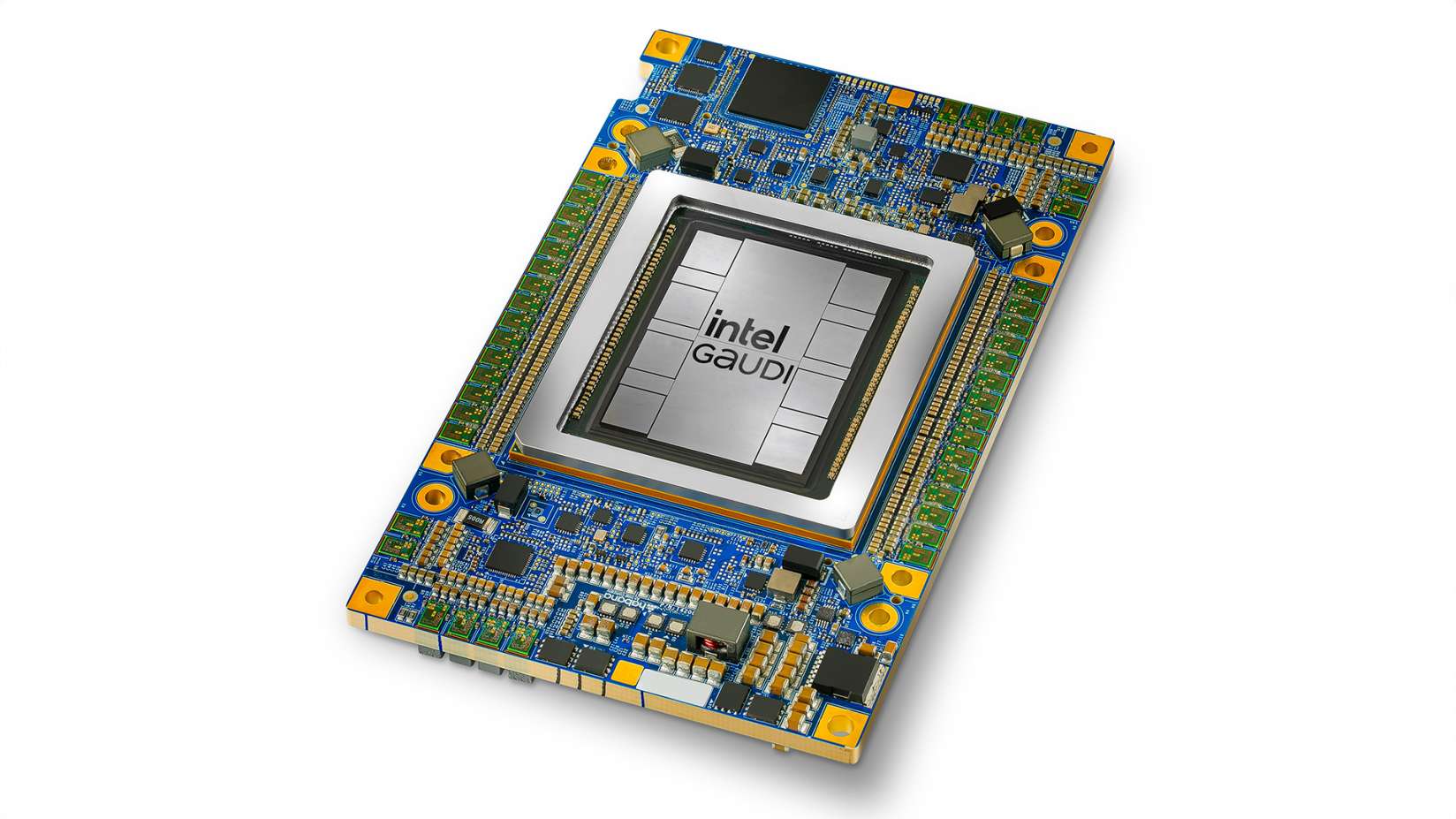

Intel Corp. today debuted a new artificial intelligence chip, the Gaudi 3, that it promises will provide up to four times the performance of the company’s previous-generation silicon.

The chipmaker detailed the product at its annual Intel Vision 2024 conference, where it also shared an update about its AI strategy. Intel plans to work with partners to expand the availability of AI hardware systems that incorporate components from multiple suppliers. Against the backdrop of the event, rival Advanced Micro Devices Inc. enhanced its own AI processor lineup with new systems-on-chip geared towards the connected device market.

The Gaudi 3 is the third iteration of a processor series Intel obtained through a $2 billion startup acquisition in 2019. Compared with its predecessor, the new chip promises to provide up to four times the performance when crunching data in the BF16 format, which is widely used by AI applications. It also has more network bandwidth, which means Gaudi 3 chips deployed in the same AI cluster can more quickly exchange data with one another.
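For readers unfamiliar with BF16: the format keeps the sign bit and the eight exponent bits of a standard 32-bit float but only seven bits of the fraction, so each value takes half the space. The sketch below illustrates the idea by simple truncation; the function names are hypothetical, and real hardware typically rounds rather than truncates, so this is an approximation of the format and not a description of how the Gaudi 3 handles it.

```python
import struct

def to_bf16_bits(x: float) -> int:
    """Return a 16-bit BF16 pattern by keeping the top half of x's float32 encoding.

    Assumption: plain truncation, chosen for simplicity. Hardware usually rounds.
    """
    (bits32,) = struct.unpack(">I", struct.pack(">f", x))
    return bits32 >> 16

def from_bf16_bits(bits16: int) -> float:
    """Expand a 16-bit BF16 pattern back to a Python float by zero-filling the low bits."""
    (x,) = struct.unpack(">f", struct.pack(">I", bits16 << 16))
    return x

value = 3.14159
approx = from_bf16_bits(to_bf16_bits(value))
print(value, "->", approx)  # the BF16 value keeps only about three significant digits
```

The trade-off is lower precision in exchange for the same numeric range as a 32-bit float at half the memory and bandwidth cost.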

The chip carries out calculations using two sets of onboard cores. The first core type, TPC, is optimized to speed up several types of computations that deep learning models commonly perform while processing data. Those computations include batch normalization, an operation that speeds up the training of deep learning models by rescaling the values passing through a network so that they have a consistent mean and variance.
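As a rough illustration of what batch normalization computes, the following minimal sketch normalizes each feature across a small batch. It is plain Python written for readability, it omits the learned scale and shift parameters that full implementations include, and the function name and the `eps` default are assumptions made for this example.

```python
import math

def batch_norm(batch, eps=1e-5):
    """Normalize each feature (column) of `batch` to roughly zero mean and unit variance.

    `batch` is a list of equally sized rows; `eps` guards against division by zero.
    """
    n = len(batch)
    num_features = len(batch[0])
    out = [[0.0] * num_features for _ in range(n)]
    for j in range(num_features):
        column = [row[j] for row in batch]
        mean = sum(column) / n
        variance = sum((v - mean) ** 2 for v in column) / n
        scale = 1.0 / math.sqrt(variance + eps)
        for i in range(n):
            out[i][j] = (column[i] - mean) * scale
    return out

# Two features with very different ranges end up on a comparable scale.
normalized = batch_norm([[1.0, 100.0], [2.0, 200.0], [3.0, 300.0]])
print(normalized)
```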

The Gaudi 3 also includes so-called MME cores. Those cores are likewise designed to accelerate computations that AI models use to process data, but focus on a different set of calculations than the TPC cores. One task that the MME circuits can speed up is the process of running convolutional layers, which are software building blocks commonly found in image recognition models.
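To give a sense of the arithmetic inside a convolutional layer, here is a minimal sketch that slides a small filter over a grid of numbers and sums the element-wise products at each position. It assumes a single channel, no padding and a stride of one, and the function name is hypothetical; a real layer runs many such filters in parallel, which is the kind of repetitive multiply-and-add work that dedicated matrix hardware is built to accelerate.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the output grid."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

image = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]
# A tiny filter that subtracts each pixel's lower-right neighbor from the pixel itself.
kernel = [
    [1, 0],
    [0, -1],
]
result = conv2d(image, kernel)
print(result)
```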

The Gaudi 3 features 64 TPC cores and eight MME cores spread across two dies, or semiconductor modules. Those modules are linked together in a way that allows them to function as a single chip. They’re supported by 128 gigabytes of onboard HBM2e memory, a type of high-speed RAM that allows AI models to quickly access the data they require for calculations.

Intel made the previous-generation Gaudi chip using Taiwan Semiconductor Manufacturing Co. Ltd.’s seven-nanometer process. With the Gaudi 3, the company has switched to a newer five-nanometer node. The latter technology makes it possible to produce faster, more power-efficient transistors.

Intel says eight Gaudi 3 chips can be installed in a single server. According to the company, each chip includes 21 Ethernet networking links that it uses to exchange data with the neighboring Gaudi 3 units. Each processor also has three more networking links, for a total of 24, that allow it to interact with chips outside its host server.

Intel says that the Gaudi 3 can outperform not only its previous-generation silicon but also Nvidia Corp.’s H100 graphics card. In an internal evaluation, the chipmaker determined that the Gaudi 3 can train some versions of the popular Llama 2 large language model up to 50% faster. It also promises to enable up to 30% faster inference than the H200, an enhanced version of Nvidia’s H100 chip that is specifically optimized for LLMs.

“Enterprises weigh considerations such as availability, scalability, performance, cost and energy efficiency,” said Justin Hotard, executive vice president and general manager of Intel’s data center and AI group. “Intel Gaudi 3 stands out as the GenAI alternative presenting a compelling combination of price performance, system scalability and time-to-value advantage.”

At the Intel Vision event where Gaudi 3 made its debut today, the chipmaker provided an update about its AI strategy. Intel said that it’s teaming up with more than a dozen partners including Red Hat and SAP SE to create an “open platform for enterprise AI.” The initiative’s goal is to give companies access to AI-optimized systems that incorporate hardware and software from multiple suppliers.

According to Intel, those systems will be optimized to run AI models with RAG features. RAG, or retrieval-augmented generation, is a machine learning technique that allows an LLM to absorb new information and incorporate it into its responses without a costly retraining process.
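The basic flow of RAG can be sketched in a few lines: find the stored text most relevant to a question, then place it in the prompt handed to the model. The example below is a toy illustration only. It ranks documents by shared words, whereas production systems typically use vector embeddings, it stops short of calling an actual LLM, and all function names are hypothetical.

```python
def tokenize(text):
    """Lowercase the text and split it into a set of words."""
    return set(text.lower().split())

def retrieve(query, documents, k=1):
    """Return the k documents that share the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]

def build_prompt(query, documents, k=1):
    """Combine the retrieved context and the question into one prompt string."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}"

documents = [
    "Gaudi 3 has 128 gigabytes of HBM2e memory",
    "EyeSight provides adaptive cruise control and automated braking",
]
prompt = build_prompt("How much memory does Gaudi 3 have", documents)
print(prompt)
```

Because the new information arrives through the prompt, updating what the model knows is a matter of changing the stored documents, with no retraining involved.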

As part of the initiative, Intel will release reference implementations demonstrating how servers with Gaudi and Xeon chips can be used to run AI workloads. It will also add more infrastructure capacity to its Tiber Developer Cloud. The latter offering is a cloud platform that Intel customers can use to train and run AI models using the chipmaker’s processors.

Against the backdrop of Intel Vision, rival AMD announced two new chip lineups. They’re primarily designed to power edge computing devices such as smart car subsystems. Both of the new chip lineups join the company’s existing Versal product portfolio, which it obtained through its $50 billion purchase of Xilinx in 2022.

All the processors in the Versal portfolio include two types of circuits. There are circuits built for a specific set of tasks, such as running AI models or processing sensory data. Each Versal chip also includes adaptable compute modules that customers can customize for their specific requirements. Those modules are based on FPGA, or field-programmable gate array, technology originally developed by Xilinx.

The first of the two Versal chip families that AMD debuted today is called the AI Edge Series Gen 2. Each processor in the family includes three sets of compute modules. There are central processing unit cores based on an Arm Holdings plc design, AI-optimized circuits and customizable FPGA modules. The FPGA circuits can turn data from the sensors in a connected device into a form that is easier to process for the device’s onboard AI models.

One early adopter of the Versal AI Edge Series Gen 2, Subaru Corp., plans to install chips from the lineup in several of its cars. The plan is to use the processors to power an advanced driver-assistance system called EyeSight. The system provides safety features such as adaptive cruise control and automated braking.

AMD detailed the AI Edge Series Gen 2 today alongside another new chip line called the Prime Series Gen 2. It features a design similar to that of the former product family, but doesn’t include AI-optimized compute modules. Each chip in the Prime Series Gen 2 lineup features Arm-based CPU cores, modules optimized to process video streams and customizable FPGA circuits.
